Many organizations have invested significant time and energy into skills—building frameworks, exploring taxonomies, evaluating platforms—yet still struggle to turn those efforts into decisions that actually change how work gets done.

Architecture exists on paper.

Governance exists in theory.

Adoption stalls in practice.

This tension was at the center of our December Skills Roundtable, where HR, Talent, and Learning leaders at very different stages of their skills journey came together to discuss a shared challenge: how to move skills architecture and governance from concept to functional reality.

What emerged was a clear and pragmatic message:

Skills architecture is less about designing the perfect model and more about enabling better business and talent decisions—incrementally, visibly, and with culture in mind.

Below are the key insights, real-world practices, and hard-earned lessons from the discussion.

What We’re Seeing Across Skills Initiatives

Why Skills Initiatives Stall (Even with Good Data)

Participants repeatedly emphasized that although skills work is often framed as a data problem, culture determines whether that data ever gets used.

Employees want to know why skills data is being collected and how it will be used.

Confusing skills ratings with performance ratings erodes trust quickly.

Adoption improves dramatically when organizations are explicit about what employees gain in return for sharing data.

Takeaway: Culture, communication, and trust, not technology, are the biggest constraints on scaling skills.

Where Skills Actually Take Hold First

Very few organizations are starting enterprise-wide—and those that do often struggle.

Instead, participants reported success when starting with:

Hourly and frontline roles, where skills are more task-based and observable

IT and digital teams, where skills change rapidly and business demand is clear

Critical roles, where skills directly differentiate performance

Several organizations are intentionally limiting skills per role (often 6–8) to avoid over-engineering and fatigue.

Best practice: Start where skills are concrete and decision-relevant—not where they are theoretically comprehensive.

Skill Validation: What Works in the Real World

One of the most common sticking points was validation.

How do we validate skills without creating something expensive, slow, or impossible to scale?

The shared reality:

Self-assessment is the most common starting point, and often sufficient initially

More formal validation (manager review, observation, assessments) should be reserved for business-critical skills

Validation rigor should match risk and use case, not ideology

Practical insight: Most organizations need data flow before they need perfect data.

Taxonomy vs. Ontology: Why Most Orgs Start Simple

The group explored the difference between:

Taxonomies (hierarchical skill structures)

Ontologies (networked relationships across skills, roles, learning, and mobility)

Key observations:

Most organizations today operate with a taxonomy—often unintentionally

Ontologies unlock advanced use cases like career mobility, skill adjacency, and AI-driven recommendations

Many see taxonomy as a foundation, not a limitation

Insight: This isn’t a binary choice. Most organizations evolve toward ontology only when the use case demands it.
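For readers who think in data structures, the distinction can be sketched in a few lines of Python. This is an illustrative sketch only, not a model any participant described: the skill, role, and course names, the relationship labels (adjacent_to, requires, teaches), and the helper functions are all hypothetical. A taxonomy gives each skill a single parent, while an ontology stores typed links between skills, roles, and learning.

```python
# Taxonomy: each skill has exactly one parent, forming a hierarchy.
# All names below are hypothetical examples.
taxonomy_parent = {
    "Python": "Programming",
    "SQL": "Programming",
    "Programming": "Technology",
    "Data Visualization": "Analytics",
    "Analytics": "Technology",
}


def taxonomy_path(skill):
    """Walk up the hierarchy from a skill to its top-level category."""
    path = [skill]
    while path[-1] in taxonomy_parent:
        path.append(taxonomy_parent[path[-1]])
    return path


# Ontology: typed relationships across skills, roles, and learning,
# stored as (source, relation, target) triples.
ontology_edges = [
    ("Python", "adjacent_to", "SQL"),
    ("SQL", "adjacent_to", "Data Visualization"),
    ("Data Analyst", "requires", "SQL"),
    ("Data Analyst", "requires", "Data Visualization"),
    ("Intro to Dashboards", "teaches", "Data Visualization"),
]


def related(node, relation):
    """Return the targets of edges that start at `node` with `relation`."""
    return sorted(t for s, r, t in ontology_edges if s == node and r == relation)


def adjacent_skills(skill):
    """Return skills adjacent to `skill`.

    Adjacency is treated as symmetric here, which is an assumption
    of this sketch rather than a rule of ontologies in general.
    """
    found = set()
    for source, relation, target in ontology_edges:
        if relation != "adjacent_to":
            continue
        if source == skill:
            found.add(target)
        elif target == skill:
            found.add(source)
    return sorted(found)


print(taxonomy_path("Python"))
print(adjacent_skills("SQL"))
print(related("Data Analyst", "requires"))
```

The taxonomy can only answer "where does this skill sit?", while the ontology can also answer questions such as "which skills sit next to SQL?" or "what does this role require?", which is why use cases like career mobility and skill adjacency tend to be what pushes an organization toward it.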

The Hidden Cost of Disconnected Skills Work

A pattern surfaced across organizations, regardless of maturity:

Skills work is happening—in learning, TA, workforce planning, succession

Data lives in silos (often spreadsheets)

Pilots are rarely connected or reused

This fragmentation slows momentum and increases rework.

Early governance win: Create visibility across skills efforts—not control—to reduce duplication and accelerate learning.

Real-World Practices Highlighted

Where organizations are starting

Piloting with critical roles, frontline teams, or IT functions

Defining a small set of future-focused skills (one organization defines ~200 at the enterprise level)

How skills are being defined

Leveraging public libraries (O*NET, ESCO, NESTA, Open Skills Network)

Using Lightcast and HRIS-embedded skill data as a baseline

Applying generative AI to standardize and clean definitions

How validation is evolving

Starting with self-assessment

Introducing manager or formal validation selectively

How technology is being approached

Delaying vendor selection until use cases are clear

Maximizing existing HRIS and learning platforms first

Treating skills architecture as an ecosystem, not a single tool

Common Barriers Organizations Are Navigating

Analysis paralysis around architecture and governance

Overly complex skill models that never get used

Employee skepticism about how skills data will be applied

Fragmented ownership across HR, Talent, and Learning

Trying to scale before proving value through pilots

Advice That Resonated Most

Use the minimum effective dose of skills architecture

Limit skills per role to what truly differentiates performance

Keep skills and performance ratings clearly distinct

Leverage what you already have before buying new tech

Treat governance as lightweight and evolving

Anchor every skills decision to a business problem, not a framework ideal

Notable Quotes from the Discussion

Nichole, Mid-size Construction Supply Firm: “Hourly roles were the easiest place to start—skills are already how the work gets done.”

Amanda, Federal Credit Union: “We finally realized we just needed to let people self-select to get data flowing.”

Kelly, Utilities Engineering Firm: “The hardest part isn’t defining skills—it’s deciding how formal validation needs to be.”

Amanda: “Thinking about skills as connected—not isolated—changed how I see learning paths.”

Who This Is Especially Relevant For

This will resonate if you’re:

Leading or supporting a skills-based initiative

Running pilots that aren’t yet connected

Facing analysis paralysis around architecture or governance

Being asked to provide clarity, priorities, or a roadmap

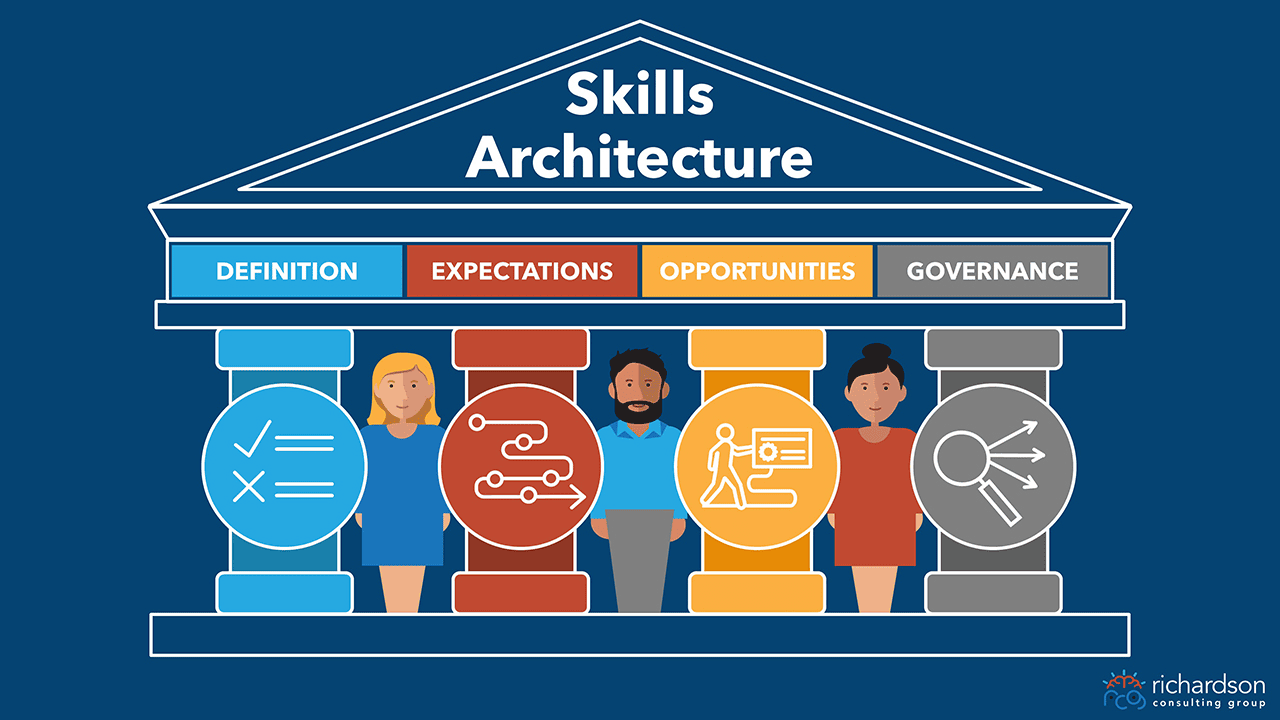

Turning Insight into Action: The Skills Readiness Snapshot

If this discussion sounds familiar, you’re likely past curiosity but not yet confident—aware of the opportunity skills represent, but unsure where to focus, how much rigor is enough, or how to align stakeholders.

That’s exactly where the Skills Readiness Snapshot helps.

What It Includes

A 45-minute working session

Benchmarking across six critical capabilities:

Culture

Skills Architecture

Skills Data

Partnerships and Governance

Analytics

Technology

Concrete, prioritized next steps

A leader-ready executive briefing to support buy-in

This is the same starting point we use with Fortune 500 clients before larger skills strategy engagements—and it’s complimentary.